ChatGPT is a popular chatbot and virtual assistant developed by OpenAI and available since November 30, 2022. This chat model lets you refine and steer a conversation toward the desired length, format, style, level of detail, and language.

However, ChatGPT is a closed, proprietary service. With continuous advancements in AI, open-source ChatGPT alternatives have emerged as powerful tools that offer similar conversational abilities along with the added benefits of customization and transparency.

Because they are open source, these alternatives also let developers tailor the models to their specific needs, integrate them into a wide range of software, and collaborate on improvements.

In this post, we’ve compiled the best open-source ChatGPT alternatives, highlighting their key features and benefits.

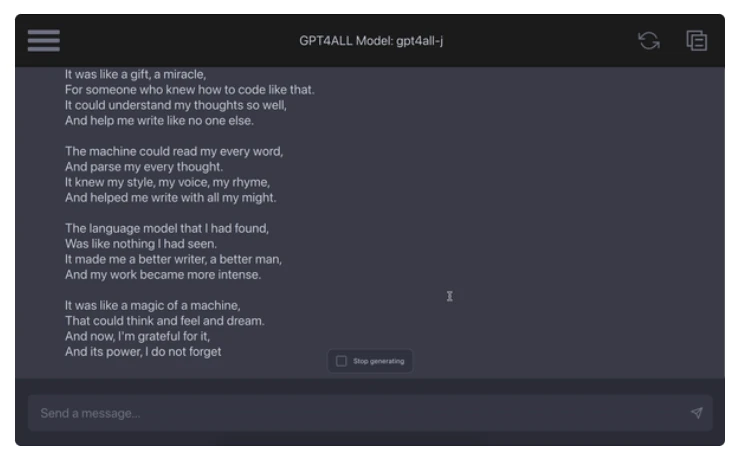

1. GPT4All

GPT4All is a free, state-of-the-art chatbot that runs locally and respects user privacy. No GPU or internet connection is required to use it.

GPT4All offers a variety of features, including writing poems, answering questions, and providing personalized writing assistance.

It can also write Python code, understand documents, and even train your own GPT4All models. On top of that, GPT4All is an open-source ecosystem that lets you set up and run large, customized language models locally on consumer-grade CPUs.

Whether you want an instruction-based model for more in-depth interactions or a chat-based model for quicker responses, this tool has you covered.

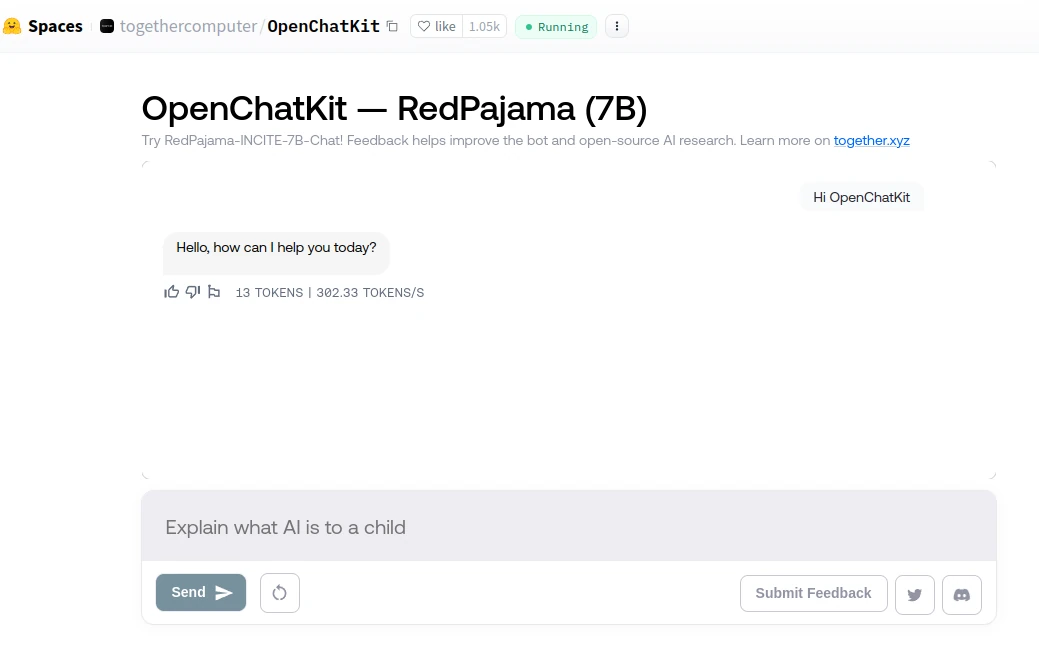

2. OpenChatKit

OpenChatKit is a fantastic ChatGPT alternative, offering individuals similar natural language processing (NLP) capabilities while allowing more flexibility and control.

Built on EleutherAI’s GPT-NeoX, OpenChatKit lets users train and fine-tune models to fit specific use cases.

OpenChatKit gives developers a full toolkit, released under the Apache 2.0 license, for building both general-purpose and specialized chatbots.

Alongside its trained models and a retrieval system, OpenChatKit lets chatbots handle a variety of tasks, including solving arithmetic problems, writing stories and code, and summarizing documents.

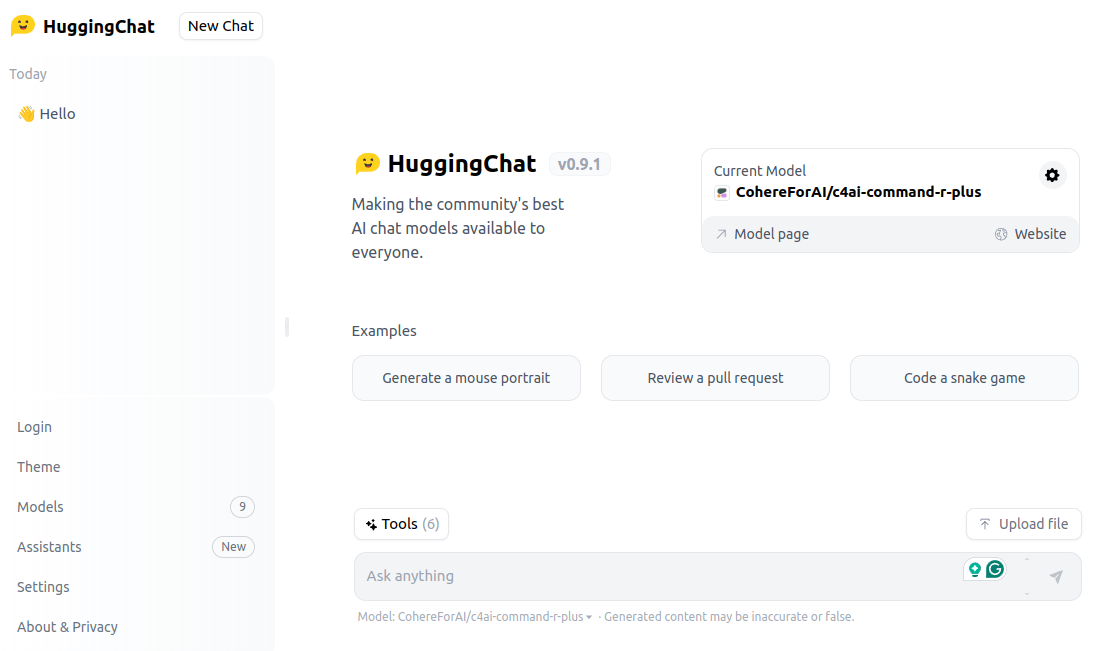

3. HuggingChat

HuggingChat is a comprehensive platform that features an extensive selection of cutting-edge open large language models (LLMs).

HuggingChat is designed with privacy in mind: users sign in with their Hugging Face (HF) accounts, and conversations remain private and aren’t shared with anyone, including model authors.

To keep offering a broad selection of state-of-the-art LLMs, HuggingChat periodically updates its model lineup, which has included Llama 2 70B, CodeLlama 34B, and Mistral 7B.

HuggingChat also hosts public discussions where users can give feedback and help shape its future.

4. Koala

Koala is a chatbot built by fine-tuning Meta’s LLaMA on dialogue data gathered from the internet. Its training data was carefully curated, and its performance was compared with ChatGPT and Stanford’s Alpaca in an extensive user study.

The results show that Koala responds effectively to a variety of user queries: its answers are often preferred over Alpaca’s and are at least tied with ChatGPT’s in over half of the cases.

This suggests that smaller public models like Koala, which can be run locally, can capture much of the performance of their larger closed counterparts when trained on carefully sourced data.

5. Alpaca-LoRA

Alpaca-LoRA is an innovative project that uses low-rank adaptation (LoRA) to reproduce the Stanford Alpaca results. It provides an Instruct model of similar quality to text-davinci-003 that can run on consumer hardware, even a Raspberry Pi (for research purposes).
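The core idea of LoRA, as described in the original LoRA paper, is to freeze a pretrained weight matrix W and train only a low-rank update B·A, scaled by alpha/r. The plain-Python sketch below illustrates that update and the resulting drop in trainable parameters. It is not code from the Alpaca-LoRA repository; the toy matrices, the alpha value, and the 4096×4096 layer size are arbitrary illustrative assumptions.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Plain-Python matrix product: rows of x times columns of y."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def lora_merge(w: Matrix, a: Matrix, b: Matrix, alpha: float) -> Matrix:
    """Return W + (alpha / r) * B @ A, i.e. the base weight with the LoRA update merged in.

    w: frozen base weight, shape (d, k)
    a: trainable down-projection, shape (r, k)
    b: trainable up-projection, shape (d, r)
    """
    r = len(a)
    scale = alpha / r
    delta = matmul(b, a)  # shape (d, k), rank at most r
    return [[wij + scale * dij for wij, dij in zip(wrow, drow)]
            for wrow, drow in zip(w, delta)]


def trainable_params(d: int, k: int, r: int) -> Tuple[int, int]:
    """Parameter counts: full fine-tuning of W versus the LoRA factors A and B."""
    return d * k, r * (d + k)


w = [[1.0, 0.0], [0.0, 1.0]]
a = [[0.5, 0.5]]          # rank r = 1
b_init = [[0.0], [0.0]]   # B starts at zero, so the adapter is a no-op before training
b = [[1.0], [2.0]]        # hypothetical values after some training

print(lora_merge(w, a, b_init, alpha=1.0))
print(lora_merge(w, a, b, alpha=1.0))
print(trainable_params(4096, 4096, 8))
```

Initializing B to zero means training starts exactly from the base model, and for a 4096×4096 layer with r = 8 only 65,536 parameters are trained instead of about 16.8 million.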

The code is flexible and can be readily extended to the 13B, 30B, and 65B models. Besides the resulting LoRA weights, the project also provides a script for downloading the foundation model and LoRA and running inference with them.

Even without hyperparameter tuning, the LoRA model produces results comparable to the Stanford Alpaca model, which demonstrates its efficacy and leaves room for further improvement through user testing and feedback.

6. ColossalChat

ColossalChat is a leading open-source solution for large AI models, featuring a complete reinforcement learning from human feedback (RLHF) pipeline built on the pre-trained LLaMA model: supervised data collection, supervised fine-tuning, reward model training, and reinforcement learning fine-tuning. As a practical open-source project, it closely mirrors ChatGPT’s original technical approach.
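The reward-model stage of an RLHF pipeline is commonly trained with a pairwise ranking loss: given two answers to the same prompt, where humans preferred one, the loss −log σ(r_chosen − r_rejected) pushes the preferred answer’s score above the other’s. The sketch below shows this standard formulation in plain Python; we have not verified that it matches ColossalChat’s exact loss, and the reward scores are hypothetical.

```python
import math
from typing import List, Tuple


def pairwise_reward_loss(r_chosen: float, r_rejected: float) -> float:
    """-log(sigmoid(r_chosen - r_rejected)): small when the preferred answer scores higher."""
    diff = r_chosen - r_rejected
    # Two algebraically equal forms of softplus(-diff); pick the one whose exp() cannot overflow.
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))


def batch_loss(pairs: List[Tuple[float, float]]) -> float:
    """Mean loss over (chosen_score, rejected_score) pairs produced by a reward model."""
    return sum(pairwise_reward_loss(c, r) for c, r in pairs) / len(pairs)


# Hypothetical reward-model scores for three human-ranked answer pairs
pairs = [(2.0, 0.5), (0.1, 0.3), (1.0, 1.0)]
print(f"mean loss: {batch_loss(pairs):.4f}")
```

When both answers receive the same score the loss is log 2; it shrinks toward zero as the preferred answer’s score pulls ahead, and the reward model trained this way then scores the chatbot’s outputs during the reinforcement learning stage.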

The platform offers an open-source bilingual dataset of 104K Chinese and English samples, an interactive online demo that requires no registration, and open-source RLHF training code for 7B and 13B models.

ColossalChat also provides 4-bit quantized inference for 7-billion-parameter models, which keeps GPU memory requirements low.
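The memory saving from 4-bit inference is easy to estimate: a weight stored in 4 bits takes a quarter of the space of a 16-bit one. The sketch below computes weight storage for a 7B model and shows simple symmetric round-to-nearest 4-bit quantization with a single scale. This is an illustrative assumption only; ColossalChat’s actual quantization method may be more sophisticated, and real schemes typically use per-group scales.

```python
from typing import List, Tuple


def weight_bytes(n_params: int, bits_per_weight: int) -> int:
    """Bytes needed just to store the weights (ignores activations and caches)."""
    return n_params * bits_per_weight // 8


def quantize_4bit(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric round-to-nearest 4-bit quantization with one absmax scale.

    Codes use the symmetric range [-7, 7], leaving one of the 16 4-bit values unused.
    """
    absmax = max((abs(w) for w in weights), default=0.0)
    scale = absmax / 7 if absmax > 0 else 1.0
    codes = [max(-7, min(7, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes: List[int], scale: float) -> List[float]:
    """Map integer codes back to approximate float weights."""
    return [c * scale for c in codes]


n = 7_000_000_000
print(f"fp16: {weight_bytes(n, 16) / 2**30:.1f} GiB, "
      f"4-bit: {weight_bytes(n, 4) / 2**30:.1f} GiB")

w = [0.12, -0.5, 0.33, 0.0, 0.49]
codes, scale = quantize_4bit(w)
print(codes, [round(x, 3) for x in dequantize(codes, scale)])
```

Each dequantized weight is off by at most half a quantization step, which is the accuracy traded for the 4× reduction in weight memory.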

After RLHF fine-tuning, ColossalChat can handle a variety of tasks in both English and Chinese, such as general knowledge tests, email writing, algorithm development, and explaining ChatGPT cloning methods.

Because it is built on PyTorch, ColossalChat is adaptable, efficient, and easy to integrate, providing a high-performance, user-friendly conversational AI experience.

7. Baize

Baize is an open-source chat model fine-tuned with LoRA on 100K dialogs that ChatGPT generated by chatting with itself, together with Alpaca’s data. Aiming to offer a complete chat model solution, the project has released 7B, 13B, and 30B models.

The model weights and code are made available under the GPL-3.0 license and are intended for research use only; commercial use is strictly prohibited. Baize provides a workable open-source pipeline for training ChatGPT-like chat models, making it a useful tool for the AI research community.

Baize supports CLI and API usage through FastChat, and the project also provides an intuitive Gradio chat interface and a bilingual dataset.

8. Dolly v2

Dolly v2 is a large language model developed by Databricks, Inc. and trained on the Databricks machine learning platform to follow instructions. The instruction-following model is available in multiple sizes (12B, 7B, and 3B) and is licensed for commercial use.

The 12B model is based on EleutherAI’s Pythia-12b and was fine-tuned on a ~15K-record instruction corpus created by Databricks employees, which gives it strong instruction-following ability. The model is designed to be used with the transformers library on GPU-equipped machines.

Dolly v2 is useful for a range of language processing tasks. It is still a work in progress, so its performance and limitations continue to be evaluated and improved.

9. Vicuna

Vicuna-13B is an open-source chatbot fine-tuned on user-shared conversations collected from ShareGPT.

In a preliminary evaluation that used GPT-4 as a judge, Vicuna-13B achieved more than 90% of the quality of OpenAI’s ChatGPT and Google Bard while outperforming other models such as LLaMA and Stanford Alpaca in more than 90% of cases, making it very competitive.

An online demo lets anyone experience the model’s capabilities first-hand, and it is freely accessible to the public for non-commercial use.

One of Vicuna-13B’s main strengths is its ability to provide detailed, well-structured responses, a result of fine-tuning on 70K user-shared ChatGPT conversations.

10. ChatRWKV

ChatRWKV, an inventive chatbot powered by the RWKV (100% RNN) language model, provides an alternative to transformer-based models like ChatGPT.

RWKV-6, the most recent version, matches transformers in quality and scaling while using less VRAM and running faster. Training of the model has been sponsored by Stability AI and EleutherAI.
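RWKV’s lower VRAM use comes from running as an RNN: each new token updates a fixed-size state instead of attending over a key/value cache that grows with the conversation. The sketch below is a simplified, single-channel version of the RWKV-4 “WKV” recurrence, assuming a fixed decay w and current-token bonus u. It omits the receptance gating and numerical-stability tricks of the real implementation, and RWKV-6 further adds data-dependent decay, so treat it as an illustration of the idea rather than ChatRWKV’s code.

```python
import math
from typing import List


def wkv(keys: List[float], values: List[float], w: float, u: float) -> List[float]:
    """Simplified single-channel RWKV-4-style WKV recurrence.

    Each output is a weighted average of past values whose weights decay by e^-w
    per step, plus a bonus e^u for the current token.
    """
    decay = math.exp(-w)
    num, den = 0.0, 0.0  # the whole recurrent state: two numbers, whatever the sequence length
    out = []
    for k, v in zip(keys, values):
        bonus = math.exp(u + k)
        out.append((num + bonus * v) / (den + bonus))
        # Fold the current token into the state for the next step
        num = decay * num + math.exp(k) * v
        den = decay * den + math.exp(k)
    return out


print(wkv([0.1, -0.2, 0.3, 0.0], [1.0, 2.0, 3.0, 4.0], w=0.5, u=0.3))
```

Because the state never grows, memory per generated token stays constant, which is the property behind RWKV’s lower VRAM requirements compared with transformers.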

ChatRWKV’s standout quality is its ability to produce excellent responses, especially with the RWKV-6 version, which has proven highly effective.

On top of that, the RWKV Discord community, with over 7,000 members, suggests that this technology has a healthy following. Its open-source nature and the resources available make it a fantastic choice for developers and researchers interested in RNN-based language models for chatbots.

11. Cerebras-GPT

Cerebras-GPT is a family of large language models (LLMs) developed by Cerebras Systems to aid in studying LLM scaling laws using open architectures and datasets.

The models range from 111M to 13B parameters and were trained following Chinchilla scaling principles, making them compute-optimal. They are available on Hugging Face and were trained on EleutherAI’s Pile dataset.
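Chinchilla scaling principles are often summarized as a rule of thumb: train on roughly 20 tokens per model parameter. Combined with the common approximation that training costs about 6 FLOPs per parameter per token, this gives a quick estimate of the data and compute budget for a model size. The sketch below applies both rules of thumb to the smallest and largest Cerebras-GPT sizes; the results are back-of-the-envelope estimates, not Cerebras’ published training figures.

```python
TOKENS_PER_PARAM = 20  # Chinchilla-style rule of thumb (approximation)
FLOPS_PER_PARAM_PER_TOKEN = 6  # common estimate for forward + backward passes


def chinchilla_tokens(n_params: int) -> int:
    """Approximate compute-optimal number of training tokens."""
    return TOKENS_PER_PARAM * n_params


def training_flops(n_params: int, n_tokens: int) -> int:
    """Approximate total training compute, C ~ 6 * N * D."""
    return FLOPS_PER_PARAM_PER_TOKEN * n_params * n_tokens


for n in (111_000_000, 13_000_000_000):
    d = chinchilla_tokens(n)
    print(f"{n:,} params -> {d:,} tokens, ~{training_flops(n, d):.2e} FLOPs")
```

Because the token budget grows with model size, compute grows roughly with the square of the parameter count, which is why the largest models dominate the total training cost of such a family.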

Cerebras-GPT models are not designed specifically as chatbots; they are meant to demonstrate how simple and scalable it is to train LLMs on the Cerebras hardware and software stack. They are therefore better suited to research and development than to real-world chatbot deployments.

12. Open Assistant

OpenAssistant is a now-completed project that set out to make a high-quality chat-based large language model accessible to everyone. Its goal was to spark a revolution in language innovation and make the world a better place by improving language itself.

OpenAssistant provides a chat frontend for real-time conversations and a data-collection frontend for improving the assistant’s functionality.

The release of the OpenAssistant Conversations (OASST1) corpus marked a step toward democratizing large-scale alignment research. Hugging Face also hosts the final published dataset, oasst2, at OpenAssistant/oasst2.
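The OASST corpora are organized as conversation trees: a prompt can have several alternative assistant replies, and each reply can be continued further. The sketch below assumes a flat, one-row-per-message layout with `message_id`, `parent_id`, `role`, and `text` fields (check the dataset card for the exact schema) and uses hypothetical toy records rather than real dataset rows. It walks `parent_id` links from each leaf to recover every complete conversation thread.

```python
from typing import Dict, List, Optional

Record = Dict[str, Optional[str]]

# Hypothetical toy records in an assumed oasst-style layout; real rows carry more fields.
messages: List[Record] = [
    {"message_id": "m1", "parent_id": None, "role": "prompter", "text": "What is LoRA?"},
    {"message_id": "m2", "parent_id": "m1", "role": "assistant", "text": "A low-rank fine-tuning method."},
    {"message_id": "m3", "parent_id": "m2", "role": "prompter", "text": "Why is it cheap?"},
    {"message_id": "m4", "parent_id": "m3", "role": "assistant", "text": "It trains only small matrices."},
    {"message_id": "m5", "parent_id": "m1", "role": "assistant", "text": "An alternative answer."},
]


def leaf_ids(records: List[Record]) -> List[str]:
    """Messages that no other message replies to, in input order."""
    parents = {r["parent_id"] for r in records if r["parent_id"] is not None}
    return [r["message_id"] for r in records if r["message_id"] not in parents]


def thread_to_leaf(records: List[Record], leaf_id: str) -> List[Record]:
    """Follow parent_id links from a leaf back to the root prompt; return root first."""
    by_id = {r["message_id"]: r for r in records}
    path: List[Record] = []
    current = by_id.get(leaf_id)
    while current is not None:
        path.append(current)
        parent = current["parent_id"]
        current = by_id.get(parent) if parent is not None else None
    return list(reversed(path))


for leaf in leaf_ids(messages):
    turns = thread_to_leaf(messages, leaf)
    print(" -> ".join(f'{t["role"]}: {t["text"]}' for t in turns))
```

Flattening the tree this way yields one training conversation per leaf, with the shared opening prompt repeated in each branch.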

OpenAssistant’s models and code are available under the Apache 2.0 license as an open-source project, which permits a variety of uses, including commercial ones. The initiative is organized by LAION and built by a team of volunteers across the globe.

The project offers opportunities for anyone interested to contribute to data collection and development.

Conclusion

With the help of these cutting-edge resources, small businesses, researchers, and developers can take advantage of language-based technology and take on the biggest names in the market.

Even though they may not outperform GPT-4, they clearly have room to grow and improve with the help of the community, and for many use cases they are already practical substitutes for it.

AI is a security risk

@dragonmouth,

It’s true that AI presents certain security risks, but it’s also important to recognize that it offers significant benefits and can enhance security when used responsibly.

Ravi,

Your answer reminds me of something a politician would say. :-)

You mentioned “certain security risks”. When it comes to security, there’s no such thing as “acceptable”. You’re either secure or you’re not.

You also mentioned “significant benefits”. What benefits are these? Convenience? The cost of convenience is often privacy and security. Even before the widespread use of ‘smart devices’, it was widely known that WiFi devices were susceptible to hacking.

Yet, the adoption of ‘smart devices’ and ‘smart homes’ skyrocketed. Anyone questioning the security of ‘smart’ technology was often dismissed as anti-progress. It’s only recently that tech journals and blogs have started highlighting the vulnerabilities of ‘smart devices’.

Similarly, AI is now the new shiny toy, and everyone is eager to jump on the bandwagon. Few are raising concerns, and unfortunately, those who do are often dismissed as Luddites.

You mentioned AI can “enhance security when used responsibly”. However, if something poses a security risk, how can it possibly “enhance security”? Phrases like “used responsibly” are empty and meaningless.

Currently, AI is akin to social networks. Despite efforts, platforms like Facebook, Instagram, and Google harvest personal data without adequate consent, which I find unacceptable.

Ravi, are you referring to charTgpt or ChatGPT?

@Venkatesh,

Sorry, it was a mistake. I was referring to ChatGPT. I have corrected the article.

Thanks for pointing it out!

Please include Ollama too. Ollama and the add-ons around it are great and easy to use.